Consumer Reports has raised serious safety concerns about Tesla’s Full Self-Driving beta, which owners are currently testing on public roads.

For quite some time, Tesla has allowed select owners to install and test its advanced driver-assistance system, and it recently released the Full Self-Driving beta 9 system. Beta testing is far from a new phenomenon, but in the automotive space it is unique to Tesla and, according to Consumer Reports, it lacks adequate safeguards.

“Videos of FSD beta 9 in action don’t show a system that makes driving safer or even less stressful,” senior director of Consumer Reports’ Auto Test Center Jake Fisher says. “Consumers are simply paying to be test engineers for developing technology without adequate safety protection.”

Most other car manufacturers and technology companies testing and developing self-driving systems are doing so either on private roads or solely with trained safety drivers behind the wheels of prototypes. Not only has Tesla gone down a different path, but it isn’t even monitoring in real time the drivers who are testing its systems.

“Tesla just asking people to pay attention isn’t enough – the system needs to make sure people are engaged when the system is operational,” Fisher added. “We already know that testing developing self-driving systems without adequate driver support can – and will – end in fatalities.”

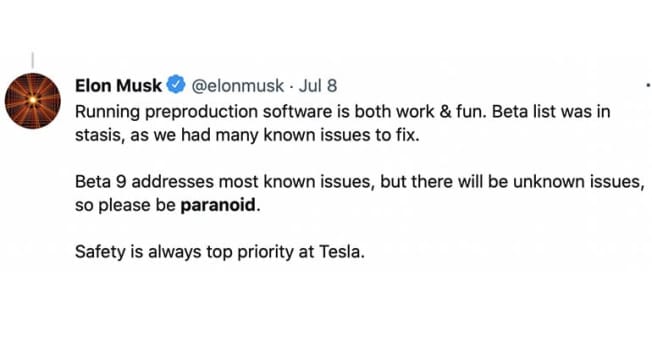

In promoting the latest Full Self-Driving beta, Elon Musk admitted that the system has issues and cautioned users.

“Beta 9 addresses most known issues, but there will be unknown issues, so please be paranoid,” he wrote on Twitter. “Safety is always top priority at Tesla.”

Consumer Reports pinpoints one particular video uploaded to YouTube as an example of the limitations and dangers of the beta. In the video, a Tesla Model 3 makes numerous mistakes: at one stage it scrapes against a bush, and after a left-hand turn it heads straight towards a parked car, forcing the driver to take control.

“It’s hard to know just by watching these videos what the exact problem is, but just watching the videos it’s clear (that) it’s having an object detection and/or classification problem,” Duke University automation expert Missy Cummings said. According to her, the system struggles to determine what the objects it perceives are, what to do with that information, or both.

Jason Levine, executive director of the Center for Auto Safety, also has serious concerns about Tesla’s beta testing.

“Vehicle manufacturers who choose to beta-test their unproven technology on both the owners of their vehicles and the general public without consent at best set back the cause of safety and at worst result in preventable crashes and deaths,” he said.