Semi-autonomous vehicles are becoming increasingly common, so it’s a bit alarming that researchers were able to trick a Tesla Model 3 that was using Navigate on Autopilot.

Regulus Cyber says it purchased a $400 GPS spoofer and a $150 jammer online. Researchers then placed a small spoofing antenna on the roof of the Model 3 to simulate an external attack while limiting its effect on other GPS receivers.

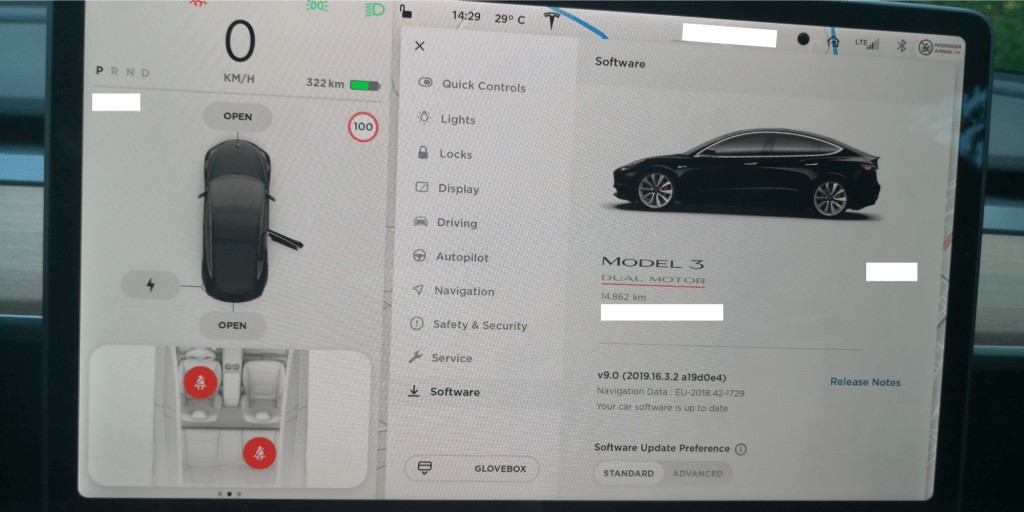

As part of the test, researchers traveled on a highway at 59 mph (95 km/h) while using Navigate on Autopilot. The route would take them to a nearby town and require the car to make an autonomous exit.

However, the team transmitted fake satellite coordinates which were picked up by the GPS receiver in the Model 3. This caused the car to think it needed to exit the highway in 500 feet (152.4 meters), when the actual exit was still over a mile away.
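To illustrate why a shifted position fix can have this effect, here is a minimal sketch of a navigation routine that decides when to begin an exit based only on the distance between the reported GPS position and the exit. This is not how Navigate on Autopilot is actually implemented. The coordinates, the 200-meter trigger threshold and the function names are all hypothetical, chosen only to mirror the "over a mile" and 500-foot figures above.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def offset_north(lat, lon, meters):
    """Return the point `meters` due north of (lat, lon); negative values go south."""
    return lat + math.degrees(meters / EARTH_RADIUS_M), lon


def should_prepare_exit(fix, exit_point, threshold_m=200.0):
    """Hypothetical rule: start the exit maneuver once the reported fix is within threshold_m."""
    return haversine_m(*fix, *exit_point) <= threshold_m


# Hypothetical exit location (not taken from the Regulus Cyber test).
EXIT = (32.0, 34.8)

# Real position: about 1,800 m (over a mile) before the exit.
true_fix = offset_north(*EXIT, -1800.0)

# Spoofed position: only 152.4 m (500 feet) before the exit.
spoofed_fix = offset_north(*EXIT, -152.4)

for label, fix in (("true", true_fix), ("spoofed", spoofed_fix)):
    distance = haversine_m(*fix, *EXIT)
    print(f"{label}: {distance:.1f} m to exit, prepare exit = {should_prepare_exit(fix, EXIT)}")
```

In this sketch the decision depends entirely on the reported fix, so a receiver that accepts the spoofed coordinates triggers the exit logic roughly a mile early.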

As a result, the car rapidly slowed to 15 mph (24 km/h) and turned onto an emergency turnoff instead of the exit. Researchers said the driver had his hands off the steering wheel at the time of the attack and, by the time he regained control of the vehicle, it was “too late to attempt to maneuver back to the highway safely.”

Regulus Cyber says Navigate on Autopilot uses GPS and Google map data to determine what lanes the vehicle should be in and what exits to take. This makes it susceptible to GPS spoofing attacks. In particular, the company says the car was successfully spoofed several times and this caused “extreme deceleration and acceleration, rapid lane changing suggestions, unnecessary signaling, multiple attempts to exit the highway at incorrect locations and extreme driving instability.”

While the spoofing attack was only designed to target the Model 3, researchers said people with more nefarious intentions could buy a high-gain directional antenna which would increase the spoofer’s range to nearly a mile. Adding an amplifier to the mix could extend the spoofer’s range to a “few miles.”

The company also tested a Tesla Model S, but the spoofing did not have any impact on the actual driving. However, the vehicle’s air suspension would change “unexpectedly” as the car was led to believe it was traveling on surfaces it wasn’t.

Regulus Cyber reached out to Tesla following its Model S test – and before the Model 3 experiment – and was told, “Any product or service that uses the public GPS broadcast system can be affected by GPS spoofing, which is why this kind of attack is considered a federal crime.” Tesla went on to say the effects of a spoofing attack would be minimal, but that “hasn’t stopped us from taking steps to introduce safeguards in the future which we believe will make our products more secure against these kinds of attacks.”

Despite these assurances, Regulus Cyber CEO Yonatan Zur said “We have ongoing research regarding this threat as we believe it’s an issue that needs solving. These new semi-autonomous features offered on new cars place drivers at risk and provides us with a dangerous glimpse of our future as passengers in driverless cars.”

H/T to Bloomberg